Cloud-Native System Performance - Better Network Performance 🕸️

Networks and the internet have always contributed to latency in system performance, and not only in cloud-native systems. A system hosted on one continent often appears slow to users on another continent.

Undersea cables have helped tremendously in bridging the distance. On land, however, a complex network of DNS servers, routers, ISPs, etc., always adds extra hops for any internet-based business.

Organizational intranets tend to perform somewhat better on-premises. However, the COVID pandemic has dramatically changed the way they operate over the last couple of years.

The network architecture of any system is more than just the backbone of everything we do in tech. I have great respect for the people who design and build networks and who understand them deeply.

In this post, we discuss various networking challenges and cloud-native approaches to improving network performance. This post is part of the series “Cloud-Native System Performance,” where I cover some of my approaches to improving the performance of systems deployed on the cloud. If you haven’t read the other posts yet, you can find them below:

Cloud-Native System Performance - Better Approaches To Storage & Memory

Cloud-Native System Performance - Improving Data Transit Through Network (This post)

I have compiled this series into a FREE eBook with more details and deeper insights. Link below! (PDF & ePub formats)

Top 5 Challenges


Network hops

Challenge: Users experience high application response latency because they are geographically far from the servers.

Resolution: This behavior is common in a client-server architecture when clients and servers are far apart and requests have to travel over the internet.

To travel over the internet, a request makes a number of hops through routers, ISPs, firewalls, etc. Every node it passes through adds some latency. The request also needs time to cover the physical distance between nodes, and that time depends on the transmission medium.
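The back-of-the-envelope estimate below makes the two sources of delay concrete. It assumes that light travels through fiber at roughly 200,000 km/s. It also assumes a hypothetical fixed delay per hop. Real routes are longer than the straight-line distance and per-hop delays vary, so treat the numbers as illustrative only.

```python
# Assumption: light in optical fiber covers roughly 200,000 km/s,
# i.e. about 200 km per millisecond (around two thirds of c).
FIBER_KM_PER_MS = 200.0


def estimate_rtt_ms(distance_km, hops, per_hop_delay_ms=0.5):
    """Rough round-trip time in milliseconds.

    Propagation: the signal covers distance_km twice (there and back).
    Hops: a hypothetical fixed processing/queuing delay at every node,
    also paid in both directions.
    """
    propagation_ms = 2 * distance_km / FIBER_KM_PER_MS
    hop_ms = 2 * hops * per_hop_delay_ms
    return propagation_ms + hop_ms


if __name__ == "__main__":
    # Illustrative inputs: a nearby user vs. one on another continent.
    print(f"nearby:  {estimate_rtt_ms(100, hops=5):.1f} ms")
    print(f"distant: {estimate_rtt_ms(10_000, hops=15):.1f} ms")
```

Under these assumptions, the distant user pays about 115 ms per round trip, versus about 6 ms for the nearby user. Most of the gap comes from distance, which is what CDNs and regional deployments reduce.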

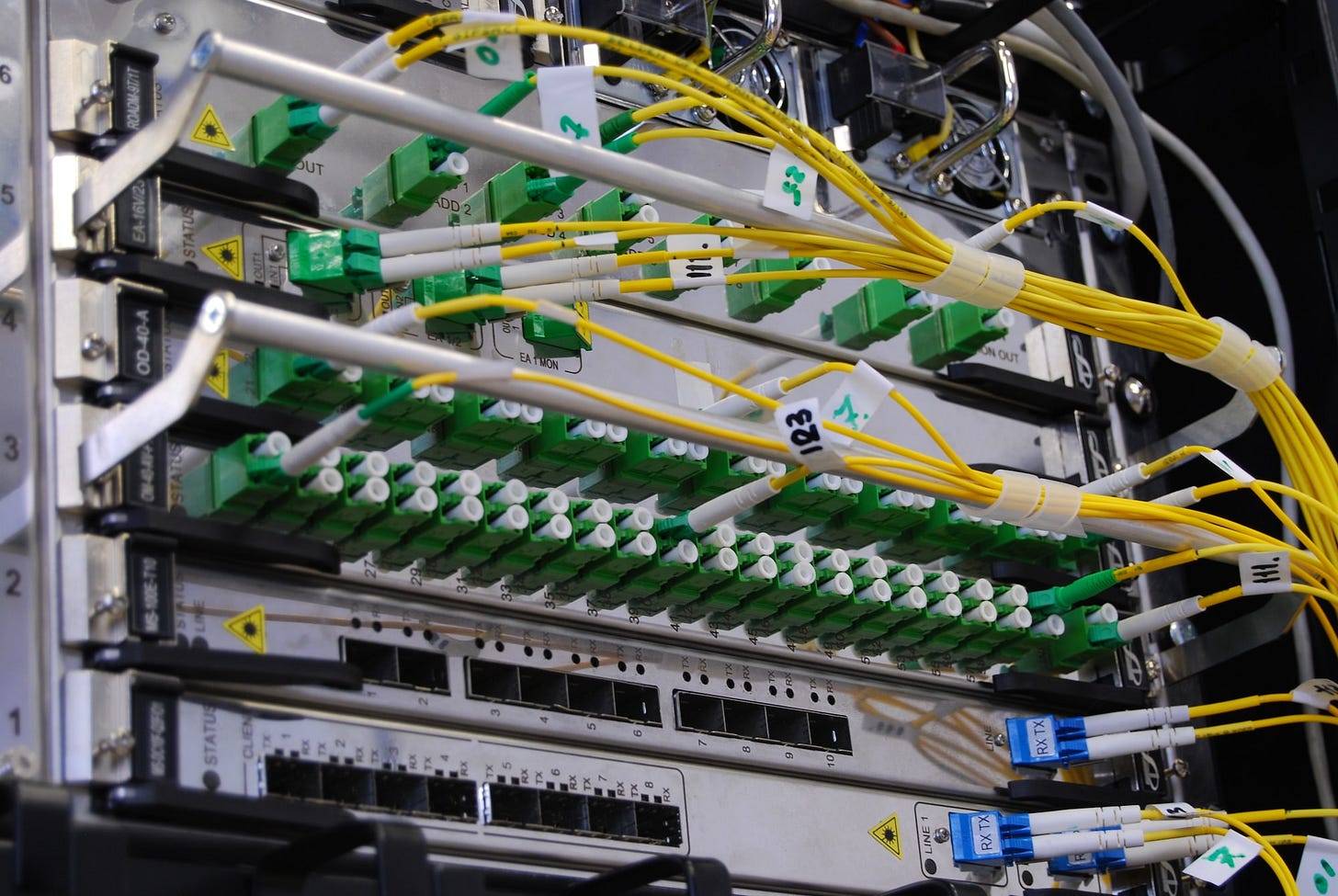

Several things can be improved to address this. First, consider the medium. For internet-based applications, the communication medium is rarely in our control, especially when users are dispersed globally. However, connecting users through newer technologies such as fiber optics and 5G can reduce latency to an extent, though not eliminate it.

Other options are much easier to leverage. Consider using CDNs to distribute the static files that make up the frontend UI of the web application. Because the static content is cached at locations closer to the users, this drastically reduces the number of hops needed to serve these files.

Organizations also commonly connect their geographically distributed locations directly, using VPNs or dedicated private lines. A dedicated line carries only the organization’s traffic, so employees and users can access internally hosted applications faster.

Network bandwidth

Challenge: Low network speeds slow down the web application and leave it in a “loading” state for a long time.

Resolution: This is a very straightforward issue, and one obvious solution is to allocate more network bandwidth to the connection.

The solution seems trivial, and there are other remedies such as compression, but the issue highlighted here is different. Allocating more bandwidth usually comes with additional cost, and everyone is quite sensitive about cost.

Paying for extra capacity 24 hours a day, when users need it only some of the time, is not a wise choice.

This problem is not specific to the client or the server; it can occur at either end of the system. Controlling bandwidth on the user's end is simply impossible. On the server side, however, organizations can continuously monitor incoming requests and allocate the optimal bandwidth to serve them during peak hours.
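The minimal sketch below turns monitored request rates into a per-hour bandwidth plan and compares it with provisioning for the peak around the clock. Several inputs are illustrative assumptions: the 30% headroom, the 50 Mbps provisioning step, and the sample request rates. The function names are hypothetical.

```python
import math


def required_mbps(requests_per_sec, avg_response_bytes, headroom=1.3):
    """Bandwidth needed to serve the observed load, plus a safety margin.
    headroom=1.3 (30% spare capacity) is an illustrative assumption."""
    bits_per_sec = requests_per_sec * avg_response_bytes * 8
    return bits_per_sec / 1_000_000 * headroom


def hourly_plan(hourly_rps, avg_response_bytes, step_mbps=50):
    """Round each hour's requirement up to the next provisioning step
    (a hypothetical granularity offered by the provider)."""
    return [
        step_mbps * math.ceil(required_mbps(rps, avg_response_bytes) / step_mbps)
        for rps in hourly_rps
    ]


if __name__ == "__main__":
    # Hypothetical monitored request rates for six hours of a day.
    observed_rps = [20, 15, 120, 400, 380, 60]
    plan = hourly_plan(observed_rps, avg_response_bytes=50_000)
    flat = max(plan) * len(plan)  # provisioning for the peak all the time
    print("per-hour Mbps:", plan)
    print("Mbps-hours, per-hour vs. flat:", sum(plan), "vs.", flat)
```

With these sample numbers, the per-hour plan needs 700 Mbps-hours. Holding the 250 Mbps peak for all six hours would need 1500.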

Encryption

Challenge: Encryption adds latency to content delivery.

Resolution: Encryption of data in transit is a security matter, and it should never be compromised just for better performance.

Web services used over the internet typically expose REST APIs to exchange data. These APIs use HTTPS and may transfer sensitive data over the network.

Secure HTTP communication relies on TLS (the successor to SSL). During the TLS handshake, the client and server agree on session keys. They then use these keys to encrypt data before sending it and to decrypt it on receipt.

Encryption and decryption therefore consume compute resources on both ends and may affect performance. That is an acceptable cost for traffic that crosses the internet.

However, when a service is hosted on a secured network and infrastructure, an SSL/TLS termination proxy can decrypt traffic at the edge, sparing the backend services this additional compute. Even if HTTPS is used for communication over the internet, consider using plain HTTP internally for communication between services.
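The termination idea can be sketched as a small TCP-level proxy built on Python's `asyncio` and `ssl` modules. The proxy accepts TLS connections from clients and lets `asyncio` decrypt them. It then forwards the plain bytes to an internal backend. The addresses, ports, and certificate file names are hypothetical. In practice, this job is usually handed to a managed load balancer or to a dedicated proxy such as NGINX or HAProxy rather than hand-written code.

```python
import asyncio
import ssl

# Hypothetical settings: replace with your own addresses and certificate files.
LISTEN_HOST, LISTEN_PORT = "0.0.0.0", 8443
BACKEND_HOST, BACKEND_PORT = "127.0.0.1", 8080
CERT_FILE, KEY_FILE = "server.crt", "server.key"


async def pipe(reader, writer):
    """Copy bytes from reader to writer until the reader reaches EOF."""
    while True:
        data = await reader.read(65536)
        if not data:
            break
        writer.write(data)
        await writer.drain()


async def handle_client(client_reader, client_writer):
    # When the server was started with an SSL context, asyncio has already
    # completed the TLS handshake: client_reader yields decrypted bytes,
    # which are forwarded to the backend over a plain TCP connection.
    backend_reader, backend_writer = await asyncio.open_connection(
        BACKEND_HOST, BACKEND_PORT)
    tasks = [
        asyncio.create_task(pipe(client_reader, backend_writer)),
        asyncio.create_task(pipe(backend_reader, client_writer)),
    ]
    try:
        # Tear down both directions as soon as either one finishes.
        await asyncio.wait(tasks, return_when=asyncio.FIRST_COMPLETED)
    finally:
        for task in tasks:
            task.cancel()
        await asyncio.gather(*tasks, return_exceptions=True)
        backend_writer.close()
        client_writer.close()


def make_tls_context(cert_file, key_file):
    """Server-side context that accepts TLS 1.2 or newer."""
    ctx = ssl.create_default_context(ssl.Purpose.CLIENT_AUTH)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    ctx.load_cert_chain(cert_file, key_file)
    return ctx


async def main():
    server = await asyncio.start_server(
        handle_client, LISTEN_HOST, LISTEN_PORT,
        ssl=make_tls_context(CERT_FILE, KEY_FILE))
    async with server:
        await server.serve_forever()

# Start with asyncio.run(main()) once the certificate files exist.
```

Only the proxy holds the certificate and pays the cost of the TLS handshake and decryption. The backend speaks plain TCP/HTTP on the internal network.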

Communication Protocol

Challenge: Inter-process and inter-service communication adds to latency.

Resolution: REST APIs over HTTP/HTTPS work well over the internet. They are built for security and for interoperability between applications running on completely different systems and hardware.

However, as discussed in the previous point, some communication happens internally, for example between services hosted on the same system or between microservices running in containers. For that traffic, consider lighter-weight communication protocols. One example is RPC.

Inter-process communication (IPC) is another option. It works at a lower level and is faster because the data does not travel up and down the full network stack.
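The Python sketch below illustrates both ideas roughly. It sends a compact binary message over a local socket pair, instead of sending a JSON document over HTTP. The message layout and function names are hypothetical. Real RPC frameworks (gRPC with Protocol Buffers, for example) also handle schemas, framing, and versioning for you.

```python
import json
import socket
import struct

# Hypothetical message layout: order_id (uint32), quantity (uint16),
# price_cents (uint32), packed in network byte order with no padding.
ORDER_FORMAT = "!IHI"
ORDER_SIZE = struct.calcsize(ORDER_FORMAT)  # 4 + 2 + 4 = 10 bytes


def encode_order(order_id, quantity, price_cents):
    return struct.pack(ORDER_FORMAT, order_id, quantity, price_cents)


def decode_order(payload):
    return struct.unpack(ORDER_FORMAT, payload)


def recv_exact(sock, n):
    """Read exactly n bytes, since recv() may return fewer."""
    buf = b""
    while len(buf) < n:
        chunk = sock.recv(n - len(buf))
        if not chunk:
            raise ConnectionError("peer closed the connection early")
        buf += chunk
    return buf


def send_order_locally(order):
    # socketpair() returns two connected sockets (AF_UNIX where available,
    # so no TCP/IP processing is involved). Both ends live in one process
    # here for brevity; in practice each end belongs to a different process.
    sender, receiver = socket.socketpair()
    with sender, receiver:
        sender.sendall(encode_order(*order))
        return decode_order(recv_exact(receiver, ORDER_SIZE))


if __name__ == "__main__":
    print(send_order_locally((42, 3, 1999)))
    as_json = json.dumps({"order_id": 42, "quantity": 3, "price_cents": 1999})
    print(f"binary: {ORDER_SIZE} bytes, JSON: {len(as_json.encode())} bytes")
```

The binary form is several times smaller than the equivalent JSON. The local socket also skips the HTTP and TCP/IP layers entirely.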

Compression

Challenge: Large data packets and objects in transit make the system unnecessarily slow.

Resolution: We use the network to send and receive data. The size of the data directly impacts the delivery of services to end-users.

Applications running in the web browser on the user's device should make sure that only essential data is communicated. Teams sometimes go to great lengths to decide whether a given data entity needs to be sent at all, just to reduce the payload size.

The smaller the object being sent, the lower the bandwidth consumption, and this is where compression comes into the picture. Compressing data before sending it speeds up delivery.

Of course, compression and decompression take a toll on compute. The trade-off still makes sense, following the general rule of “compute over data”: spend CPU cycles to move fewer bytes.
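The minimal sketch below shows the trade-off using Python's standard `gzip` module. It gzips a JSON payload only when the payload is large enough to benefit. It reports the encoding so that a caller could set an HTTP `Content-Encoding: gzip` header. The 1 KB threshold and the function names are illustrative assumptions.

```python
import gzip
import json

MIN_COMPRESS_BYTES = 1024  # illustrative threshold; tiny payloads rarely benefit


def encode_payload(obj):
    """Serialize obj to compact JSON and gzip it if it is large enough.
    Returns (body, content_encoding), where content_encoding is None or "gzip"."""
    raw = json.dumps(obj, separators=(",", ":")).encode("utf-8")
    if len(raw) < MIN_COMPRESS_BYTES:
        return raw, None
    return gzip.compress(raw), "gzip"


def decode_payload(body, content_encoding):
    """Reverse encode_payload on the receiving side."""
    if content_encoding == "gzip":
        body = gzip.decompress(body)
    return json.loads(body)


if __name__ == "__main__":
    # Repetitive records, like many API list responses, compress well.
    records = [{"id": i, "status": "ACTIVE", "region": "eu-west"} for i in range(500)]
    body, encoding = encode_payload(records)
    raw_size = len(json.dumps(records, separators=(",", ":")).encode("utf-8"))
    print(f"raw: {raw_size} bytes, sent: {len(body)} bytes ({encoding})")
```

The threshold expresses the “compute over data” rule in a practical form. Compression is applied only where the bytes saved are likely to outweigh the CPU spent.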


Top 5 Cloud-Native Approaches


Backbone network

In terms of networking, well-established cloud platforms have data centers and server farms in many locations across the globe. Wherever the traffic comes from, there is a high probability that the provider has a data center nearby.

These data centers are interconnected by a backbone network that the cloud provider builds and dedicates to traffic between them.

This is a great advantage. If application users are spread across multiple continents, these backbone networks make it easier to accelerate the delivery of your services. In addition, a data center close to users brings other advantages for hosting applications and databases, storage, caching mechanisms, etc.

Cloud-native architecture makes it very easy to deploy and scale across regions, which inherently improves performance.
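Multi-region deployment only pays off when each user is served from a nearby region. The sketch below routes a user to the closest region by great-circle (haversine) distance. The region names and coordinates are hypothetical, and distance is only a rough proxy for latency. Managed DNS and traffic-routing services can route on measured latency instead.

```python
import math

# Hypothetical regions with approximate coordinates of their host cities.
REGIONS = {
    "us-east": (38.9, -77.4),       # northern Virginia
    "eu-central": (50.1, 8.7),      # Frankfurt
    "ap-southeast": (1.35, 103.8),  # Singapore
}

EARTH_RADIUS_KM = 6371.0


def haversine_km(a, b):
    """Great-circle distance in kilometres between two (lat, lon) points."""
    lat1, lon1, lat2, lon2 = map(math.radians, (*a, *b))
    dlat, dlon = lat2 - lat1, lon2 - lon1
    h = (math.sin(dlat / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin(dlon / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(h))


def nearest_region(user_location):
    """Name of the region whose host city is closest to the user."""
    return min(REGIONS, key=lambda name: haversine_km(user_location, REGIONS[name]))


if __name__ == "__main__":
    print(nearest_region((48.86, 2.35)))     # a user in Paris
    print(nearest_region((-33.87, 151.21)))  # a user in Sydney
```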

API Gateway Caching

As with other services, cloud providers offer managed services for hosting and configuring API gateways for your applications. Today, APIs are the interface of choice for most web applications.

API gateways not only help define API paths but also integrate seamlessly with other services for compute, storage, databases, functions, containers, orchestration, queues, etc. Their rich web UIs make it easy to configure gateways and maintain their versions.

Because APIs send and receive payload data, these gateway services come with several pre-built features that help with formatting, encoding, and securing payloads. Since these features are built in, little effort is needed to configure them for optimal delivery.

Leverage these features to compress and decompress payloads automatically, classify requests and route them to target services, and enable caching to reduce application response latency.
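Managed gateways usually expose caching as a configuration switch. The sketch below shows roughly what that switch does: GET responses are kept for a fixed time-to-live, so that repeated requests skip the backend. The class, the function, and the keying scheme (method, path, query) are hypothetical. They do not reflect any provider's API.

```python
import time


class TTLResponseCache:
    """Hypothetical gateway-style cache: stores GET responses, keyed by
    method, path and query string, for ttl_seconds."""

    def __init__(self, ttl_seconds=60, clock=time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock  # injectable so expiry can be tested
        self._entries = {}  # key -> (expires_at, response)

    @staticmethod
    def key(method, path, query=""):
        return (method.upper(), path, query)

    def get(self, method, path, query=""):
        k = self.key(method, path, query)
        entry = self._entries.get(k)
        if entry is None:
            return None
        expires_at, response = entry
        if self.clock() >= expires_at:
            del self._entries[k]  # stale: evict and report a miss
            return None
        return response

    def put(self, method, path, response, query=""):
        if method.upper() != "GET":  # only cache safe, idempotent reads
            return
        self._entries[self.key(method, path, query)] = (
            self.clock() + self.ttl, response)


def handle(cache, method, path, backend, query=""):
    """Serve from the cache when possible; otherwise call the backend and cache."""
    cached = cache.get(method, path, query)
    if cached is not None:
        return cached
    response = backend(method, path, query)
    cache.put(method, path, response, query)
    return response
```

The TTL is the main design choice. A longer TTL saves more backend round trips but serves staler data, so it should follow how often the underlying resource changes.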

CDNs

Cloud providers operate their own backbone networks that carry dedicated traffic between their data centers, so it is convenient for them to offer CDN services as well.

Major cloud platforms offer CDN services that deliver static content very effectively. Web applications served through a web browser typically use HTML, CSS, and JS files to build the interactive user interface. These files are fairly static and change infrequently.

When deploying applications on cloud platforms, it makes sense to take advantage of CDN services to improve the network performance of these applications.
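A CDN can only cache what the origin allows it to cache, and the `Cache-Control` response header is the standard way to express that. The sketch below picks a header value per request path. It assumes that the build tool adds a content hash to asset file names (for example `app.3f9a1c2b.js`). The TTL values are illustrative. How a particular CDN honors these directives also depends on its own configuration.

```python
import re

# Assumption: the build tool fingerprints asset names with a hex content hash,
# so a changed file always gets a new URL.
FINGERPRINTED = re.compile(r"\.[0-9a-f]{8,}\.(?:js|css|png|jpg|svg|woff2)$")
STATIC_EXTENSIONS = (".js", ".css", ".png", ".jpg", ".svg", ".woff2")


def cache_control_for(path):
    """Pick a Cache-Control header value that a CDN edge can act on."""
    if FINGERPRINTED.search(path):
        # Content never changes at this URL: cache for a year.
        return "public, max-age=31536000, immutable"
    if path.endswith(".html") or path.endswith("/"):
        # HTML points at the fingerprinted assets, so revalidate it each time.
        return "no-cache"
    if path.endswith(STATIC_EXTENSIONS):
        # Un-fingerprinted static files: short browser TTL, longer shared-cache TTL.
        return "public, max-age=300, s-maxage=3600"
    # Anything else (e.g. API responses) is not cached under this policy.
    return "no-store"
```

Fingerprinting is what makes the long TTL safe. A new deployment changes the asset URLs referenced by the revalidated HTML, so users never receive stale JS or CSS.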

Direct Connections

Traditionally, enterprises hosted their own servers on their premises, essentially maintaining their own private data centers. With cloud computing, organizations saw the value in reducing this maintenance overhead and started migrating their workloads to the cloud.

However, organizations are still hesitant to move some business-critical applications and data to the cloud. These applications are often not completely independent of the workloads that have already moved, so the two islands need to be connected.

Connecting them over the public internet raises two prime issues: security and network reliability. Reliability directly affects performance in ways that cannot be predicted.

Cloud providers offer the ability to connect to their network directly from a designated on-premises location (for example, AWS Direct Connect or Azure ExpressRoute). This provides a dedicated, private connection between on-premises services and the services hosted on the cloud that does not traverse the public internet, which improves the performance of hybrid systems.

Choosing the right load balancers

Load balancers are typically classified as network load balancers (NLBs) or application load balancers (ALBs). The primary difference between the two is that an NLB operates at layer 4 (TCP and UDP), while an ALB operates at layer 7 (HTTP).

This difference translates into very different behavior and performance. ALBs “understand” HTTP attributes and headers and can base their routing decisions on this information.

NLBs, on the other hand, work with the smaller set of information available at the TCP/UDP layer. This lets them deliver the best raw load-balancing performance.

Typically, ALBs are used internally, within a private network of microservices, to distribute load based on incoming request attributes. NLBs are used to receive requests from the public network and distribute them.
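The contrast between the two layers can be sketched in a few lines of Python. The layer-4 picker sees only the connection's 5-tuple, so it hashes the tuple to keep a connection on one backend; flow hashing is a common approach, though not necessarily what any given product does. The layer-7 picker has a parsed HTTP request, so it can route on headers and paths. All names, the `x-canary` header, and the pool names are hypothetical.

```python
import hashlib


def l4_pick(five_tuple, backends):
    """Layer-4 style: only addresses, ports and protocol are visible, so hash
    the connection's 5-tuple. Every packet of one connection maps to the same
    backend, and no payload has to be parsed."""
    digest = hashlib.sha256(repr(five_tuple).encode()).digest()
    return backends[int.from_bytes(digest[:8], "big") % len(backends)]


def l7_pick(path, headers, path_routes, default_pool, canary_pool=None):
    """Layer-7 style: the HTTP request has been parsed, so route on content.
    A hypothetical canary header wins; otherwise the longest matching path
    prefix decides. Header names are assumed to be lower-cased."""
    if canary_pool and headers.get("x-canary") == "true":
        return canary_pool
    matches = [prefix for prefix in path_routes if path.startswith(prefix)]
    if not matches:
        return default_pool
    return path_routes[max(matches, key=len)]


if __name__ == "__main__":
    conn = ("203.0.113.7", 51514, "198.51.100.10", 443, "TCP")
    print(l4_pick(conn, ["10.0.0.1", "10.0.0.2", "10.0.0.3"]))
    routes = {"/api/": "api-pool", "/api/orders/": "orders-pool"}
    print(l7_pick("/api/orders/17", {}, routes, "web-pool"))
```

The layer-4 function does a fixed, cheap amount of work per connection. The layer-7 function must first have the HTTP request parsed, and that extra work is what buys the richer routing.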

Additionally, consider analyzing the logs generated by monitoring traffic through these load balancers. These logs can reveal request congestion that slows down the system.
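The minimal sketch below shows the kind of analysis meant here. It groups requests by minute and flags minutes whose volume or 95th-percentile response time crosses a threshold. The log format is a simplified, hypothetical one (timestamp, method, path, status, response time in seconds); real load balancer access logs have their own formats and need their own parser. The thresholds are illustrative.

```python
import math
from collections import defaultdict
from datetime import datetime


def parse_line(line):
    """Parse one line of a hypothetical log format:
    <ISO-8601 timestamp> <method> <path> <status> <response seconds>"""
    ts, _method, _path, _status, seconds = line.split()
    return datetime.fromisoformat(ts.replace("Z", "+00:00")), float(seconds)


def p95(values):
    """Nearest-rank 95th percentile."""
    ordered = sorted(values)
    rank = max(1, math.ceil(0.95 * len(ordered)))
    return ordered[rank - 1]


def congested_minutes(lines, max_requests=1000, max_p95_seconds=1.0):
    """Flag minutes whose request count or p95 latency exceeds the
    (illustrative) thresholds. Returns (minute, count, p95) tuples."""
    per_minute = defaultdict(list)
    for line in lines:
        ts, seconds = parse_line(line)
        per_minute[ts.replace(second=0, microsecond=0)].append(seconds)
    flagged = []
    for minute, latencies in sorted(per_minute.items()):
        worst = p95(latencies)
        if len(latencies) > max_requests or worst > max_p95_seconds:
            flagged.append((minute, len(latencies), worst))
    return flagged
```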
